How to Request Indexing in Google Search Console

Last updated 8/29/2023

The problem or issue:

Page indexing issues for:

Crawled — currently not indexed

Discovered — currently not indexed

TL;DR

If some of your URLs (mostly blog posts) aren’t getting indexed by Google, then this tutorial is for you! This tutorial covers 3 phases:

Phase 1: Deleting Hidden Demo Content

Sometimes frustration can lead to unexpected silver linings! In this case, I was starting to wonder why some of my blog posts weren’t getting indexed by Google. I had just launched my website in May of 2023, so I wasn’t super worried yet, but it was July and that meant I was 2 months post-launch.

It was odd that some blog post URLs were either “Discovered - currently not indexed” or “Crawled - currently not indexed” in my Google Search Console Page Indexing reports.

I had spent only a few days digging into this issue, trying to figure out what was happening, when I stumbled across a blog post from Jen with Jen-X Website Design & Strategy.

The silver lining to this was getting to know Jen better! We really hit it off while we discussed approaches to fixing this issue and compared notes. She’s a lot of fun and I love her brand!

We interrupt this blog post to present a very special award to Jen for BEST FREAKING BUSINESS NAME EVER!

Anyway, as I was saying, Jen posted a link to her blog post “Solution: This is Why Google Isn’t Indexing Squarespace Blog Posts” in a Facebook Group, and I immediately started following her tutorial to see if this may be the root of my problem.

In her post, Jen outlines how she used the SEOspace plugin and realized she had pages that weren’t being indexed. I had come to the same conclusion using Google Search Console.

In short, she goes over Squarespace settings you should look for in your post for Sharing to Google Search Console, as well as demo content being generated for any image placed in a Classic Editor section (in this case, all blog posts use the Classic Editor so this is definitely going to impact any blog post that has an image added to it).

Watch her video here to learn how to take these steps and view your sitemap to check for demo content!

Once you’ve located and deleted all of your demo content, it’s important to wait at least 24 hours before taking the steps outlined in Phase 2. Jot down the date and time you completed Phase 1.

So how do you know your sitemap has been updated?

Has it been at least 24 hours? If yes, go to step 2.

While viewing your Sitemap (your Squarespace sitemap is www.yourdomain.com/sitemap.xml), what is the Last Modified date for your page or collection? Is the date correct? If not, reach out to Squarespace Support to request a manual resubmission of your sitemap (if it’s been 24 hours).

After Support submits your sitemap, check again to make sure the Last Modified date is correct AND that all instances of Demo Content have been removed*
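If you'd rather not scan the sitemap by eye, here is a minimal Python sketch of the Last Modified check. It assumes your sitemap follows the standard sitemaps.org format with `<loc>` and `<lastmod>` entries; the function name `list_last_modified` and the sample URLs are made up for illustration, so treat this as a starting point rather than an official method.

```python
import xml.etree.ElementTree as ET
from typing import Dict, Optional

# Namespace used by standard sitemap files (sitemaps.org protocol).
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def list_last_modified(sitemap_xml: str) -> Dict[str, Optional[str]]:
    """Map each <loc> URL in a sitemap to its <lastmod> value (None if absent)."""
    root = ET.fromstring(sitemap_xml)
    result: Dict[str, Optional[str]] = {}
    for url in root.findall("sm:url", SITEMAP_NS):
        loc = url.findtext("sm:loc", default="", namespaces=SITEMAP_NS).strip()
        lastmod = url.findtext("sm:lastmod", default=None, namespaces=SITEMAP_NS)
        result[loc] = lastmod.strip() if lastmod else None
    return result


# Hypothetical sample standing in for www.yourdomain.com/sitemap.xml.
SAMPLE = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.yourdomain.com/blog/post-one</loc><lastmod>2023-08-18</lastmod></url>
  <url><loc>https://www.yourdomain.com/blog/post-two</loc></url>
</urlset>"""

for page, modified in list_last_modified(SAMPLE).items():
    print(page, "->", modified or "no Last Modified date")
```

To run this against your real site, you would first download your sitemap (for example with Python's `urllib.request`) and pass its text to the function, then compare the dates against the date and time you jotted down at the end of Phase 1.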

*Sometimes Demo Content doesn’t Interfere

When looking at my sitemap, I can see a blog post URL that’s showing demo content on several images and in this particular instance it’s completely fine!

Why is that? Because I’m demonstrating formatting tips for blog posts in 7.1, and have demonstrations of image design options, and so yes, I want to keep the demo content because it’s obviously demo content.

Another important note is that this blog post URL is properly indexed by Google. I can easily confirm this by pasting the URL into the URL inspection field at the top like so:

In short, if you see demo content for a page that is currently indexed by Google, then no worries! There’s no problem to fix in this instance. Ultimately, I do agree with Jen that demo content had an impact on why some of my blog posts weren’t getting indexed.

You only need to take the steps outlined in Phase 2 & 3 if the URL that’s not indexed should indeed be indexed - more on that below!

Phase 2: Review and Export Indexing Reports

This link in Google Search Console’s help center will give you a great overview of what the Page Indexing Report tells you.

Important takeaways:

Not every URL should be indexed. Think of hidden pages, or pages you’ve set up for the sole purpose of adding files for freebie downloads, category and tag pages that get generated from blog collections, etc.

Visit that page to read more about each “indexing reason.” This post will be going over two specific reasons and how to proceed with manually requesting indexing:

Crawled - currently not indexed

Discovered - currently not indexed

Create a Working File for URLs

In Google Search Console, on the left choose Pages under Indexing

Click on Crawled - currently not indexed

At the top, click on Export > Google Sheets

Prep the exported Google Worksheet:

View the 3rd worksheet, titled Metadata > this contains the issue, in this case “Crawled - currently not indexed.” Copy this text and rename the worksheet along with the date you pulled the report like so:

Crawled - currently not indexed - 08-18-2023

View the 2nd sheet titled Table > this contains all the URLs in the report.

Sort the sheet by URLs (A to Z)

Eliminate all rows with the following in the URL:

/tag

RSS

amp

/category

You should be left with potentially viable URLs to focus on

Add a header for NOTES in the last column on this sheet – this is where you will note “Requested indexing mm/dd/yyyy” or other issues that you discover such as 404 Not Found (more on these details below).

Repeat these steps above for the Discovered - currently not indexed report.

Now we have two working files to keep track of our progress! This will be especially helpful since you are limited to submitting 10 individual URLs a day for reindexing (those steps are outlined in Phase 3).
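If your exported list is long, the sorting and row-elimination steps above can also be done with a short script. This is only a sketch under a few assumptions: the names `viable_urls` and `daily_batches` are hypothetical, and I'm assuming the RSS and AMP variants show up in your export as `format=rss` and `format=amp`. Check your own report and adjust the markers if yours look different.

```python
from typing import Iterable, List

# Substrings that mark URLs to drop. "/tag" and "/category" come straight from
# the list above; "format=rss" and "format=amp" are an ASSUMPTION about how the
# RSS and AMP variants appear in the export. Adjust to match your own report.
EXCLUDE_MARKERS = ("/tag", "/category", "format=rss", "format=amp")
DAILY_LIMIT = 10  # the per-day request limit mentioned in this post


def viable_urls(urls: Iterable[str]) -> List[str]:
    """Return the URLs worth inspecting, sorted A to Z with duplicates removed."""
    kept = {
        u.strip()
        for u in urls
        if u.strip() and not any(m in u.lower() for m in EXCLUDE_MARKERS)
    }
    return sorted(kept)


def daily_batches(urls: List[str], size: int = DAILY_LIMIT) -> List[List[str]]:
    """Split the list into chunks of at most `size` URLs, one chunk per day."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


# Hypothetical rows copied from the "Table" sheet of an export.
exported = [
    "https://www.yourdomain.com/blog/tag/seo",
    "https://www.yourdomain.com/blog/post-b",
    "https://www.yourdomain.com/blog?format=rss",
    "https://www.yourdomain.com/blog/post-a",
    "https://www.yourdomain.com/blog/category/news",
    "https://www.yourdomain.com/blog/post-a?format=amp",
]

for day, batch in enumerate(daily_batches(viable_urls(exported)), start=1):
    print(f"Day {day}: {batch}")
```

Note that a plain substring match like `/tag` would also drop a post whose slug starts with "tag" (for example `/tagging-tips`), so skim the removed rows before trusting the result.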

Phase 3: Testing each URL and Request Indexing

By far the easiest way to work through this list is to copy each URL from your worksheet and paste it into the URL Inspection tool found at the top of Search Console.

The next thing Search Console shows you is the result of this inspection. It looks like this, but don’t stop here! The next step is to click on the button “TEST LIVE URL” as shown below.

Once you click on “TEST LIVE URL” (which may take a minute), you will see if this URL is eligible for indexing or not – examples of both are shown below.

How do I know if a URL is indexable?

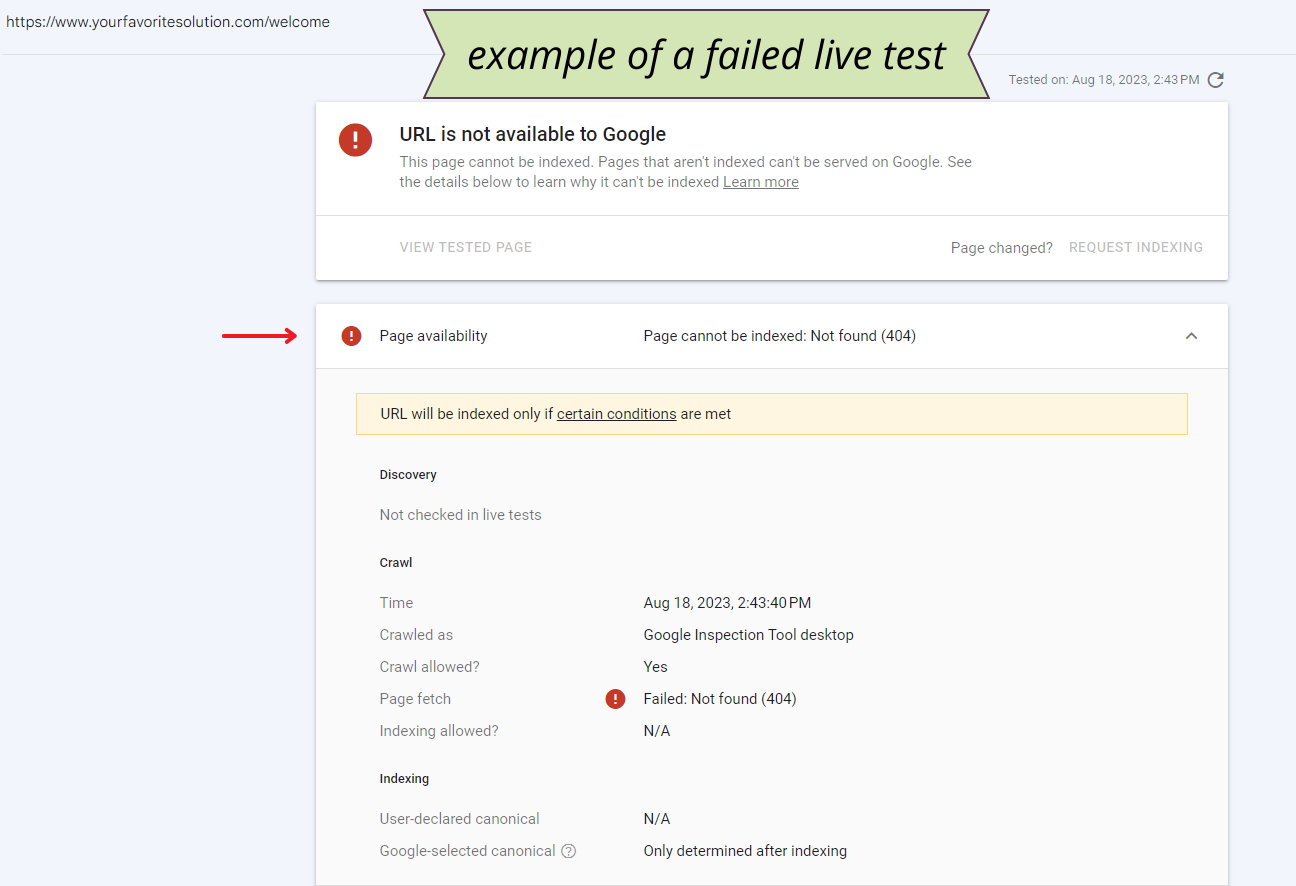

If you see Green check marks on this report then congratulations! This URL exists and is eligible for indexing. But, if you see a report like the one below with the Red Circle and Exclamation Mark, you can ignore this URL for fixing (unless it was a valid URL and you need to redirect to a new or different page – more on that in another post).

In this example, the URL was created early on in my website build. The page itself no longer exists and I have no need to add a redirect for this. Because this URL doesn’t exist, I get this kind of result after clicking “TEST LIVE URL:”

Not Indexable:

When you click on “TEST LIVE URL” for a valid URL, you will see a result that looks more like this one:

Now that we’ve confirmed this URL is valid, click on “REQUEST INDEXING” as shown in the screenshot above. Add a note in your worksheet that you “Requested indexing mm/dd/yyyy” so you can revisit and track your progress.

At this point, you have to wait, and yes it can feel like an eternity! It could take up to a week for Google to recrawl those submitted URLs and (hopefully) index the URLs you submitted.

Resubmitting requests to index a URL will not speed up the process. At the time of publishing this post, I’ve been waiting 5 days, and anticipate it being a full week at minimum. I’ll update this post with the results!

Phase 4: Evaluating Results

Here’s a timeline of events for this tutorial and an update!

August 18, 2023: Requested indexing for 5 URLs (all 5 were from my Squarespace Release Tracker blog collection)

August 22, 2023: Sitemap was crawled by Google Search Console

August 25, 2023: Checked on the status of the URLs I requested indexing for:

2 of the 5 were indexed

The other 3 are probably considered “low-quality” due to having very little content. I knew this was a possibility with having my own Release Tracker, and I’m ok with that!

1 of the 3 that wasn’t indexed was used in this demonstration.

August 26, 2023: I clicked on the “VALIDATE FIX” button to see if that would spur any changes

When I view this report in Search Console, it shows me the message: “Validation started” with a date of 8/26/23

August 27, 2023: I received an email from Google Search Console notifying me that “some fixes failed” for my validation request on this report; when I clicked on “View Issue Details” it listed 2 of the URLs that I was trying to index and a new URL from my main blog.

Inspecting this new URL showed me that the URL was indeed on Google and that it was indexed.

Takeaway: Google Search Console is cumbersome, and you sometimes have to really dig into individual URLs to determine what’s actually going on.

The validation request itself was still unfinished as of August 29, 2023! The way Google handles these emails can be frustrating.

August 27, 2023: Google recrawled the sitemap

So what does all of this mean?

Troubleshooting comes down to when your sitemap was last crawled relative to when you published or updated the page you’re troubleshooting.

Example:

Published after last sitemap crawled date: If you publish a post on August 1, and your sitemap was last crawled on July 27, then this new blog post may not get indexed until the sitemap is recrawled

Published before last sitemap crawled date: If you publish a post on August 1, and your sitemap is crawled on August 3, and your new blog post is showing “not indexed” then you should revisit the steps outlined in Phase 1-3 of this post.
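The two examples above boil down to a simple date comparison. Here's a small Python sketch of that rule of thumb; the function name `next_step` and the wording of its results are my own, and treating a same-day publish and crawl as "wait" is an assumption, since the crawl may have happened before the post went live.

```python
from datetime import date


def next_step(published: date, sitemap_last_crawled: date, indexed: bool) -> str:
    """Suggest what to do for one URL, following the rule of thumb above."""
    if indexed:
        return "indexed: nothing to do"
    # ASSUMPTION: a crawl on the publish date may have run before publishing,
    # so same-day is treated the same as "published after the last crawl".
    if published >= sitemap_last_crawled:
        return "wait: sitemap has not been recrawled since publishing"
    return "troubleshoot: revisit Phases 1-3"


# The two examples from this post (the year is only for illustration).
print(next_step(date(2023, 8, 1), date(2023, 7, 27), indexed=False))
print(next_step(date(2023, 8, 1), date(2023, 8, 3), indexed=False))
```

You can find the dates to plug in from your worksheet notes and from the sitemap's last read date in Search Console.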

Variables that can impact indexing with Google:

Low quality or low content (for example, my posts in the Release Tracker are intentionally short and to the point. My long-term goal is to build opportunities for cross-indexing with other blog posts and services).

The younger your site, the longer it can take for Google to crawl or recrawl. I don’t have hard data to back this up, just real-world experience, but I feel like it can take at least 30 days before you see frequent crawling.

Connecting your site to Google Search Console is an absolute must for troubleshooting errors.

How to Prioritize Indexing Issues in Google Search Console:

URLs that are valid and important to your website (such as a well-written blog post or the main page of your website) deserve more attention and troubleshooting

“Crawled - currently not indexed” is more important than “Discovered - currently not indexed” because the first (Crawled) means Google has crawled the page but hasn’t indexed it for some reason, whereas the second (Discovered) means Google plans on coming back to this page. I successfully reduced my original count of 22 URLs in the “Discovered” report to 1 after requesting validation. The one remaining URL is perfectly fine not being indexed.

Search Console Indexing Issues You Can Ignore

There are a few common and very benign issues you will see in Search Console for your Squarespace website.

When you see these 2 issues for your home page:

Page with redirect

Alternate page with proper canonical tag

These are related to how Squarespace handles your home page. The home page itself has a dedicated URL (page slug) just like any other page; however, when you set a page to Home, the system automatically redirects to the primary domain. So instead of seeing www.domain.com/home on the home page, you only see www.domain.com.

Excluded by ‘noindex’ tag

If you’ve chosen to hide your tag and category pages in your blog post settings, then the majority of these URLs will come from that. You can toggle those in the Blog settings > under SEO like so:

Similarly, other pages you may have purposefully hidden from search engines will be listed here. If you see a URL that should not be here, then check your page settings.

Blocked by robots.txt

Most likely, this is due to you hiding or disabling a feature. For my website, I chose to hide author profiles for now, so that is appearing here in this report.

Soft 404

Ironically, my custom 404-error page shows up here, and that’s perfectly fine since I don’t need this page to be indexed!

As with any report, it’s important to review the URLs shown in each report and evaluate them individually.

Search Console Indexing Issues You May Need to Review

Not found (404)

If the URL was an active page or post, be sure to check that you have a 301 redirect in place for it (or 302 if this is temporary). Otherwise, this may have been the result of a page that was created, inadvertently indexed, and then later deleted because you didn’t need it. In this case, there are no issues!

Thanks for reading and don’t forget to comment below to let us know what your experience has been with this or if you have any questions!

Looking for additional help?

If you continue to have indexing issues with Google Search Console, book some time with me! I’m offering a 10% off coupon code just for troubleshooting this issue - use SITEMAPHELP when booking (we suggest starting with a 1-hour or 90-minute Website Maintenance appointment).